’nuff said.

No more staples!

Got rid of a plugin!

In WordPress, it’s usually not the CMS or PHP that’s the problem but, most frequently, plugins.

So whenever one can get rid of a plugin, it’s always a cause for celebration.

Yay, for I now use WordPress’s native galleries!

I’ve had surgery #hospitalporn

On May 29th I woke up fresh and in good humour, and proceeded to have a shower. Just afterwards, as I dried myself, in naught but a couple of seconds I thought an explosion had gone off in my head: the strongest headache I’ve ever felt fired up and left me unable to do anything. I had to wake up my family to take me to the big H, fearing the worst (a stroke, or something worse entirely).

This post contains some gory imagery, but it won’t be displayed automatically; you’ll have to click the images to view them. The experience was so traumatic to me that I need to write this as a sort of catharsis.

WARNING: it is long, and it’s mean, and VIEWER DISCRETION IS ADVISED.

Simple experiment with systemd-networkd and systemd-resolved

In my previous post, I wrote about how simple it was to create containers with systemd-nspawn.

But what if you wanted to expose a container to the outside network? The rest of the world can’t add mymachines to /etc/nsswitch.conf and expect it to work, right?

And what if you were trying to reduce the installed dependencies in an operating system using systemd?

Enter systemd-networkd and systemd-resolved…

Firstly, this Fedora 25 host is a KVM guest, so I added a new network interface for “service”, where I created the bridge (yes, with nmcli; why not learn it along the way?):

```shell
nmcli con add type bridge con-name Containers ifname Containers
nmcli con add type ethernet con-name br-slave-1 ifname ens8 master Containers
nmcli con up Containers
```
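For the container’s host0 interface to end up on that bridge, the container has to be attached to it. One way to do that, assuming the container is started with machinectl (which goes through systemd-nspawn@.service), is a per-machine .nspawn settings file with Bridge= in its [Network] section, as described in systemd.nspawn(5). A minimal sketch follows; the helper name write_nspawn_bridge is hypothetical, and the directory is a parameter so it can be tried on scratch paths first.

```shell
#!/bin/sh
# Sketch: attach a machine to a host bridge through a .nspawn settings file.
# write_nspawn_bridge is a hypothetical helper; on a real host the directory
# would be /etc/systemd/nspawn (written as root).
write_nspawn_bridge() {
    dir=$1        # directory holding .nspawn files
    machine=$2    # container name, e.g. test
    bridge=$3     # host bridge, e.g. Containers
    mkdir -p "$dir" || return 1
    # [Network] Bridge= is the settings-file counterpart of --network-bridge=
    cat > "$dir/$machine.nspawn" <<EOF
[Network]
Bridge=$bridge
EOF
}

# Usage on the host (assumption: container "test", bridge "Containers"):
#   write_nspawn_bridge /etc/systemd/nspawn test Containers
# then power the container off and start it again so the setting is read.
```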

Then, in container test, I configured a rule to use DHCP (and left in a modicum of a template for static addresses, no… that’s not my network) and replaced /etc/resolv.conf with a symlink to the file systemd-resolved manages:

```shell
cat <<EOF > /etc/systemd/network/20-default.network
[Match]
Name=host0

[Network]
DHCP=yes
# or replace the line above with the lines below:
#Address=192.168.10.100/24
#Gateway=192.168.10.1
#DNS=8.8.8.8
EOF
rm /etc/resolv.conf
ln -s /run/systemd/resolve/resolv.conf /etc/resolv.conf
```
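The commented-out lines in that file are the static alternative to DHCP=yes. A minimal sketch that writes either variant from parameters, so switching doesn’t mean hand-editing comments; the helper name write_network_file is hypothetical, and inside the container the target would be /etc/systemd/network/20-default.network.

```shell
#!/bin/sh
# Sketch: write the container's .network file for DHCP or a static address.
# write_network_file is a hypothetical helper.
# Usage: write_network_file FILE dhcp
#        write_network_file FILE static ADDRESS/PREFIX GATEWAY DNS
write_network_file() {
    file=$1 mode=$2
    # Validate arguments before touching the file.
    case $mode in
        dhcp)
            body='DHCP=yes'
            ;;
        static)
            if [ $# -ne 5 ]; then
                echo "usage: write_network_file FILE static ADDR/PREFIX GW DNS" >&2
                return 1
            fi
            body="Address=$3
Gateway=$4
DNS=$5"
            ;;
        *)
            echo "unknown mode: $mode" >&2
            return 1
            ;;
    esac
    # Same [Match] section as the hand-written file: the nspawn-side interface.
    printf '[Match]\nName=host0\n\n[Network]\n%s\n' "$body" > "$file"
}
```

For example, write_network_file /etc/systemd/network/20-default.network static 192.168.10.100/24 192.168.10.1 8.8.8.8 reproduces the commented-out variant.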

Finally, I enabled and started networkd and resolved:

```shell
systemctl enable systemd-networkd
systemctl enable systemd-resolved
systemctl start systemd-networkd
systemctl start systemd-resolved
```

A few seconds later…

```
-bash-4.3# ip addr list dev host0
2: host0@if29: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN group default qlen 1000
    link/ether 06:14:9c:9e:ac:ca brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.10.92/24 brd 192.168.10.255 scope global host0
       valid_lft forever preferred_lft forever
-bash-4.3# cat /etc/resolv.conf
# This file is managed by systemd-resolved(8). Do not edit.
#
# This is a dynamic resolv.conf file for connecting local clients directly to
# all known DNS servers.
#
# Third party programs must not access this file directly, but only through the
# symlink at /etc/resolv.conf. To manage resolv.conf(5) in a different way,
# replace this symlink by a static file or a different symlink.
#
# See systemd-resolved.service(8) for details about the supported modes of
# operation for /etc/resolv.conf.

nameserver 192.168.10.1
```
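To check the same thing from a script (that /etc/resolv.conf points at the systemd-resolved file, and which nameservers it lists), here is a minimal sketch; check_resolv is a hypothetical helper, and both paths are overridable so it can be tried on scratch files.

```shell
#!/bin/sh
# Sketch: confirm resolv.conf is the symlink created above and print the
# nameservers it lists. check_resolv is a hypothetical helper.
check_resolv() {
    link=${1:-/etc/resolv.conf}
    want=${2:-/run/systemd/resolve/resolv.conf}
    # readlink (without -f) returns the literal target given to ln -s
    if [ ! -L "$link" ] || [ "$(readlink "$link")" != "$want" ]; then
        echo "$link is not a symlink to $want" >&2
        return 1
    fi
    # Print the address from every "nameserver ADDRESS" line.
    awk '$1 == "nameserver" { print $2 }' "$link"
}
```

Run inside the container with no arguments, it should print the nameserver address(es) from the file, 192.168.10.1 in the output above.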

Happy hacking!

Simple experiment with systemd-nspawn containers

For this test I used Fedora 25. Your mileage may vary on other operating systems: some things may be the same, some may not.

WARNING: you’ll need to disable SELinux, so to me this was merely an interesting experiment, one that increased my knowledge, especially regarding SELinux + containers. Bad mix: no security, containers don’t contain, etc.

Many thanks to the nice people from #fedora and #selinux who graciously lent their time to help me when I was trying to use nspawn with SELinux enabled. With their help, especially Grift’s from #selinux, we were actually able to run it, but only in a way I’m so uncomfortable with that I ultimately considered this experiment a #fail: I’m definitely not going to use containers like that any time soon, and there’s still a lot of work to do before containers can run with some security. I hope the Docker infatuation leads the good engineers at Red Hat and others involved in that work to a universal solution for security + containers.

But it certainly was a success in terms of gaining experience beyond my familiarity with OpenVZ, a benefit that is quickly expiring.

Enough words, here’s how simple it was…

Firstly, let’s set up a template that we’ll copy to create new instances. As I’m using Fedora 25, I used DNF’s ability to install into a directory:

```shell
dnf --releasever=25 \
    --installroot=/var/lib/machines/template-fedora-25 \
    -y install systemd passwd dnf fedora-release \
    iproute less vi procps-ng tcpdump iputils
```

You only need the first three lines, though; the fourth just adds a few more packages I preferred to have in my template.

Secondly, you’ll probably want to customize your template further, so you’ll enter your container as if it were (well, it is) an enhanced chroot:

```shell
cd /var/lib/machines
systemd-nspawn -D template-fedora-25
```

Now we have a console, and the sky is the limit for what you can set up, for instance a default password for root with passwd (though you may not want to do this in a production environment).

For some weird reason, passwd kept failing with an authentication token manipulation error, but I quickly solved it by simply reinstalling passwd (dnf -y reinstall passwd). Meh…

I also ran dnf -y clean all before exiting the container to free up space wasted on package metadata that would expire quickly anyway.

When you’re done customizing, exit the container by pressing Ctrl+] three times within a second.

Finally, we’re ready to preserve the template:

```shell
cd template-fedora-25
tar --selinux --acls --xattrs -czvf \
    ../$(basename "$(pwd)")-$(date +%Y%m%d).tar.gz .
cd ..
```
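The same archiving step as a reusable function: a minimal sketch that uses tar’s -C option instead of cd and prints the path of the tarball it creates. archive_template and TAR_FLAGS are hypothetical names; TAR_FLAGS defaults to the SELinux/ACL/xattr flags used above and can be set to an empty string on a tar built without that support. Pass absolute paths.

```shell
#!/bin/sh
# Sketch: archive a template directory into a date-stamped tarball next to it.
# archive_template is a hypothetical helper; TAR_FLAGS (optional) overrides
# the extended-attribute flags.
archive_template() {
    src=$1                                 # e.g. /var/lib/machines/template-fedora-25
    out_dir=${2:-$(dirname "$src")}        # defaults to the parent directory
    out="$out_dir/$(basename "$src")-$(date +%Y%m%d).tar.gz"
    default_flags='--selinux --acls --xattrs'
    flags=${TAR_FLAGS-$default_flags}      # an empty TAR_FLAGS disables them
    # $flags is intentionally unquoted so it splits into separate options.
    tar $flags -C "$src" -czf "$out" . || return 1
    printf '%s\n' "$out"
}
```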

We’re now ready to create a test container and launch it in the background:

```shell
mkdir test
cd test
tar --selinux --acls --xattrs -xzvf \
    ../template-fedora-25-20170701.tar.gz
cd ..
machinectl start test
```
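If you create containers often, the mkdir/extract steps can be wrapped in a function that refuses to overwrite an existing machine. A minimal sketch; clone_container, MACHINES_DIR and TAR_FLAGS are hypothetical names (MACHINES_DIR defaults to /var/lib/machines), and the archive path should be absolute.

```shell
#!/bin/sh
# Sketch: create a new container directory from a template tarball.
# clone_container is a hypothetical helper.
clone_container() {
    archive=$1                             # absolute path to template-*.tar.gz
    name=$2                                # new machine name, e.g. test
    machines=${MACHINES_DIR:-/var/lib/machines}
    default_flags='--selinux --acls --xattrs'
    flags=${TAR_FLAGS-$default_flags}      # an empty TAR_FLAGS disables them
    if [ -e "$machines/$name" ]; then
        echo "$machines/$name already exists" >&2
        return 1
    fi
    mkdir -p "$machines/$name" || return 1
    # $flags is intentionally unquoted so it splits into separate options.
    tar $flags -C "$machines/$name" -xzf "$archive"
}

# Usage on the host, as root:
#   clone_container /var/lib/machines/template-fedora-25-20170701.tar.gz test &&
#       machinectl start test
```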

This container probably won’t be able to expose services to the outside without some help, but you can log in to its console with machinectl login test.

You’ll also get automagic name resolution from your host computer to the containers it runs if you change the hosts entry in /etc/nsswitch.conf, placing mymachines between files and dns (or wherever fits your setup):

hosts: files mymachines dns myhostname
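A minimal sketch of making that change from a script: it inserts mymachines right after files on the hosts line and does nothing if mymachines is already there. add_mymachines is a hypothetical helper; it normalizes the whitespace on the hosts line, leaves lines without files untouched, and it’s wise to back up /etc/nsswitch.conf before trying it on the real file.

```shell
#!/bin/sh
# Sketch: add "mymachines" after "files" on the hosts line of an
# nsswitch.conf-style file (idempotent). add_mymachines is hypothetical.
add_mymachines() {
    file=${1:-/etc/nsswitch.conf}
    tmp=$(mktemp) || return 1
    awk '
        $1 == "hosts:" && !/mymachines/ {
            line = $1
            for (i = 2; i <= NF; i++) {
                line = line " " $i
                if ($i == "files") line = line " mymachines"
            }
            print line
            next
        }
        { print }
    ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Copy back with cat so the original file keeps its owner and mode.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}
```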

If you had enabled SSH in your container, you’d be able to run ssh test from the host machine, or reach a web server you installed in it. Who knows.

As you saw, despite a lot of words trying to explain every step of the way, it’s excruciatingly simple.

The next article (Simple experiment with systemd-networkd and systemd-resolved) expands this example with a bridge in the host machine in order to allow your containers to talk directly with the external world.

Happy hacking!

If you want to give me a gift…

Get me a wristwatch I can put my own OS on! 🙂 Give me an LG Watch Urbane so I can install AsteroidOS: it looks slick and it even uses Wayland.

Amazing!

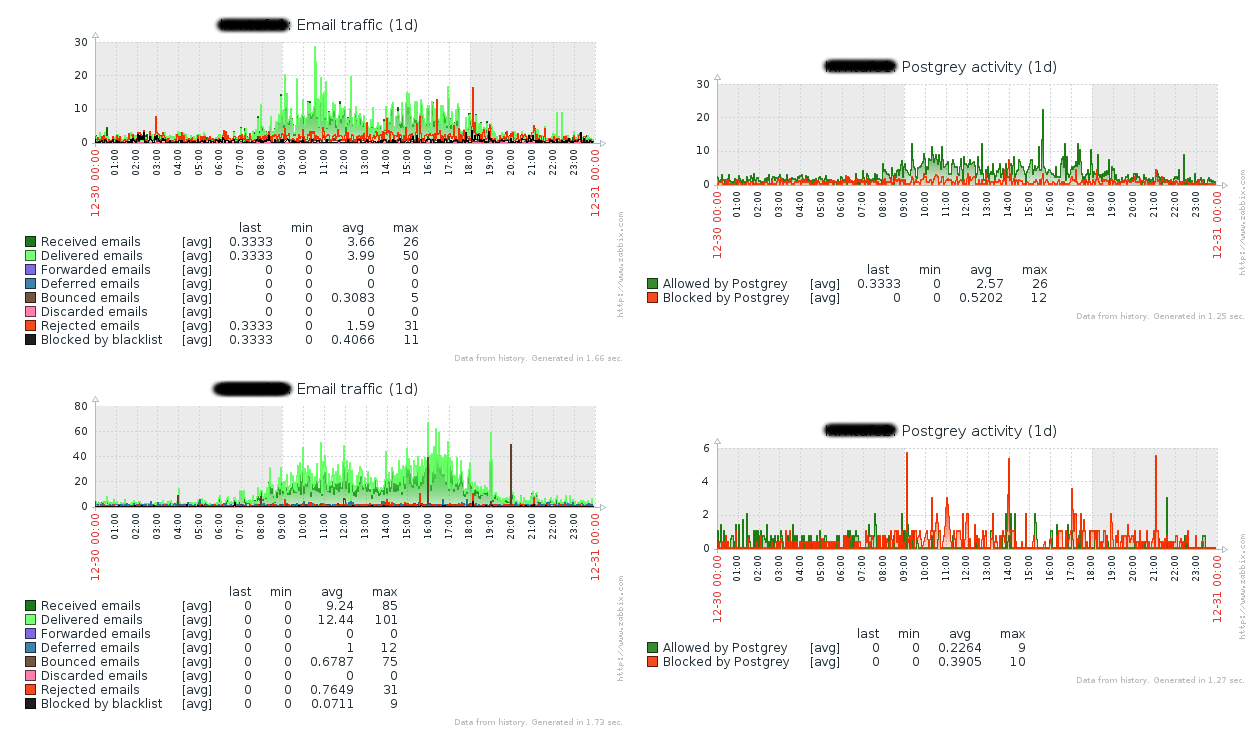

Zabbix Postfix (and Postgrey) templates

Today’s Zabbix templates are for Postfix and Postgrey (kept separate in case you don’t use both).

Since I run a moderate-volume set of email servers, I could probably have Zabbix request the data and parse the logs all the time, but why not do it in a way that scales better? (Yes, I know I have 3 greps that could be replaced by a single awk call; I just noticed it and will improve it in the future.)

I took a few other examples as a base and improved on them a bit, resulting in the following:

- A cron job selects the entries added to /var/log/maillog since the previous run (using logtail from the logcheck package in EPEL)

- Then pflogsumm is run on them, along with other queries gathering info pflogsumm doesn’t collect (in my case, postgrey activity, RBL blocks and mail queue size)

- Then zabbix_sender is used to send the data to the monitoring server
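The three greps mentioned earlier could become a single awk call. A minimal sketch of that idea, which counts several event types in one pass and prints lines in zabbix_sender’s input-file format (<host> <key> <value>). This is not the pfstats.sh script itself: the match strings (“blocked using” for RBL rejects, “action=greylist” and “action=pass” for postgrey) are assumptions about typical log lines, and the item keys are hypothetical; they would have to match trapper items in your templates.

```shell
#!/bin/sh
# Sketch: one awk pass over a maillog chunk, emitting zabbix_sender input.
# Patterns and item keys are assumptions; adapt them to your logs/templates.
mail_counts() {
    host=${1:--}    # "-" tells zabbix_sender to use the configured hostname
    awk -v host="$host" '
        /blocked using/   { rbl++ }
        /action=greylist/ { greylisted++ }
        /action=pass/     { passed++ }
        END {
            # Unset counters print as 0 with %d.
            printf "%s postfix.rbl_blocked %d\n", host, rbl
            printf "%s postgrey.greylisted %d\n", host, greylisted
            printf "%s postgrey.passed %d\n", host, passed
        }
    '
}

# Hypothetical wiring (offset file and config path are placeholders):
#   logtail -f /var/log/maillog -o /var/tmp/maillog.offset |
#       mail_counts | zabbix_sender -c /etc/zabbix/zabbix_agentd.conf -i -
```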

The cron job takes as its argument the time window (delta t) of logs you want to parse. In my case it’s -1, as I’m running it every minute, and that value is passed to find … -mmin. You’d set it up like this:

* * * * * /usr/local/bin/pfstats.sh -1

This setup will very likely require some adaptation to your particular environment, but I hope it’s useful to you.

Then you can make a screen combining the graphs from both templates, as in the following example:

Zabbix Keepalived template

I’m cleaning up some templates I’ve done for Zabbix and publishing them over here. The first one is Keepalived as a load balancer.

This template…

- requires no scripts placed on the server

- creates an application, Keepalived

- collects from the agent:

  - whether it is a master

  - whether it is an IPv4 router

  - the number of keepalived processes

- reports on:

  - state changes (from master to backup or the reverse) as WARNING

  - a backup server that’s neither a router nor has keepalived routing as HIGH (your redundancy is impacted)

  - a master server that’s neither a router nor has keepalived routing as DISASTER (your service will be impacted if there’s an availability issue in one real server, as nothing else will automatically let IPVS know of a different table)
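The severities above form a small decision table. A minimal sketch of one reading of it, assuming “a router” means net.ipv4.ip_forward is 1 and “keepalived routing” means at least one keepalived process is running; keepalived_severity is a hypothetical helper, and the template itself expresses these rules as Zabbix triggers rather than a script.

```shell
#!/bin/sh
# Sketch of the trigger rules as a decision table (one interpretation).
# Possible agent items feeding it: proc.num[keepalived] and
# vfs.file.contents[/proc/sys/net/ipv4/ip_forward].
# Usage: keepalived_severity ROLE IP_FORWARD PROCS [PREVIOUS_ROLE]
keepalived_severity() {
    role=$1 ip_forward=$2 procs=$3 previous=${4:-$1}
    found=0
    if [ "$previous" != "$role" ]; then
        echo WARNING                           # master <-> backup state change
        found=1
    fi
    if [ "$ip_forward" != 1 ] && [ "$procs" -eq 0 ]; then
        case $role in
            master) echo DISASTER; found=1 ;;  # service impacted
            backup) echo HIGH; found=1 ;;      # redundancy impacted
        esac
    fi
    [ "$found" -eq 1 ] || echo OK
}
```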

I still haven’t found a good way to report on the cluster other than creating triggers on hosts, though. Any ideas?

Up next is Postfix and, hopefully, IPVS Instance (not sure it can be done without scripts or writing an agent plugin, though. I haven’t done it yet).